Deploying ML Models to the Edge using Azure DevOps


Renjith Ravindranathan


- Dev ops, Microsoft azure, Azure

- October 29, 2020

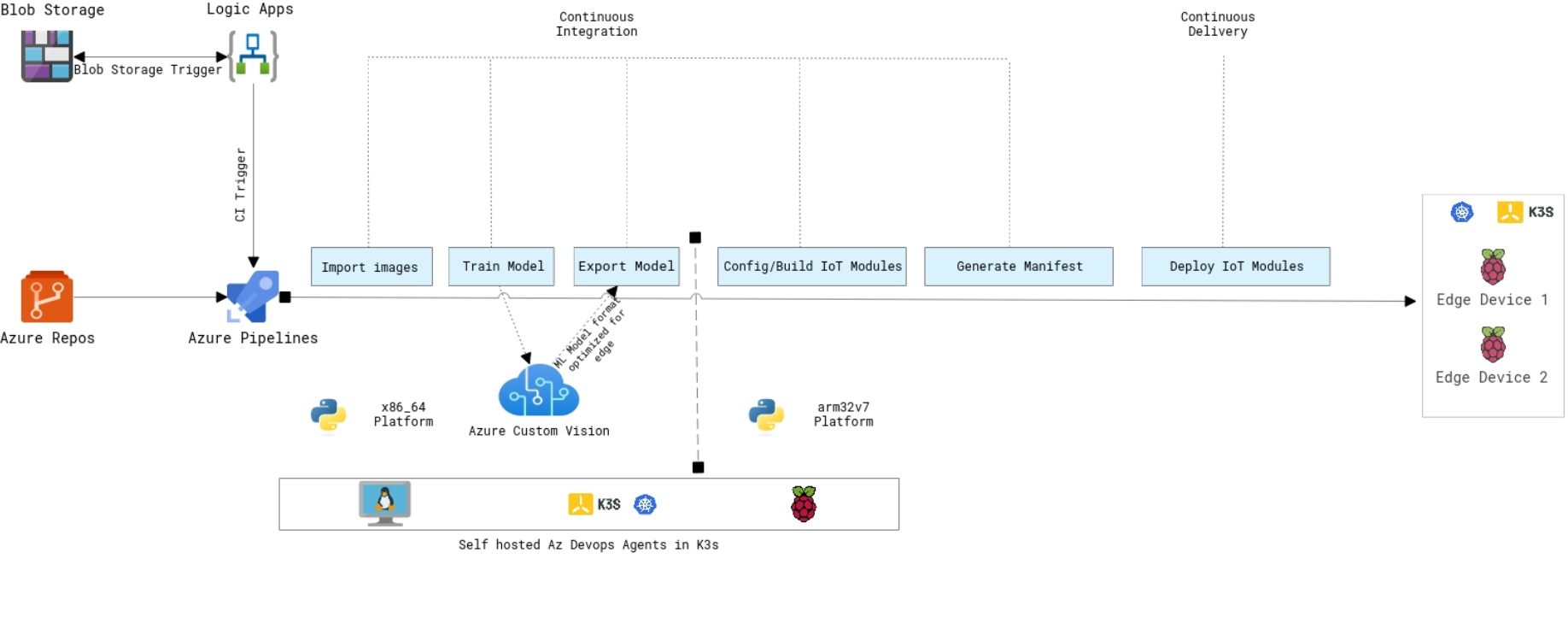

Training ML models from scratch and exporting them in a form optimized for edge devices is quite challenging, especially for a beginner in the ML space. Azure Cognitive Services takes on much of the heavy lifting for common problems such as image classification and speech recognition. In this article, I will show how I created a simple pipeline (a kind of MLOps) that deploys a model to an edge device using Azure IoT modules and Azure DevOps Services.

For the implementation, I used the following resources.

Cloud Services

1. Blob Storage: for storing the images used for ML training
2. Logic Apps: to respond to Blob storage upload events and trigger a POST REST API call to Azure Pipelines
3. Cognitive Services: for training on the images and generating a model optimized specifically for edge devices. Speech services are used as well.
4. IoT Hub: to register IoT edge devices and the modules (programs) associated with them
5. Azure VM: acts as a build server for building the application on the x86_64 platform. Containerized Az DevOps agents run inside it, orchestrated using the K3s Kubernetes distribution.

Hardware

1. Raspberry Pi: for building Azure IoT modules and acting as the edge device
2. USB webcam: to identify objects
3. Speaker: to output voice

Software/Tools

1. Python 3.8
2. K3s Kubernetes distribution
3. Az IoT Edge Solution
4. Helm 3
5. Docker CE
6. Docker Hub

The Workflow

The workflow starts when images are uploaded to Azure Blob Storage. The folders inside it are organized by category so that images can be tagged programmatically. When there is an upload event, the configured Logic App is triggered and issues a POST REST API call to Azure DevOps Services to trigger CI.

The project is written in Python, and the CI process is executed by self-hosted, containerized Azure DevOps agents on K3s on the x86_64 platform. In the CI process, the images are read, tagged and trained using the Azure Custom Vision Service, and an image classification model optimized for edge devices is generated as output.

The next stage covers configuration, manifest file generation and deployment of the Azure IoT modules. It is carried out by containerized Azure DevOps agents on K3s on the armv7 platform. The previously generated model is packaged with the Azure IoT modules, which are eventually deployed to the Raspberry Pi. The Pi acts as the edge device and runs a K3s Kubernetes cluster for easier management of the Az IoT modules.

The modules deployed on the Raspberry Pi consume the video stream from the USB camera attached to the device, recognize the objects the ML model was trained on, and output speech through the speaker.

We can split the pipeline setup into 3 parts:

1. Storage setup
2. Continuous Integration
3. Continuous Delivery

- Storage

For storage, we first need to create a Blob Storage account and organize the folders inside it by category for tagging. See the link for more information on the creation steps. My Blob storage looks like the screenshots below, with images categorized using the folder structure.

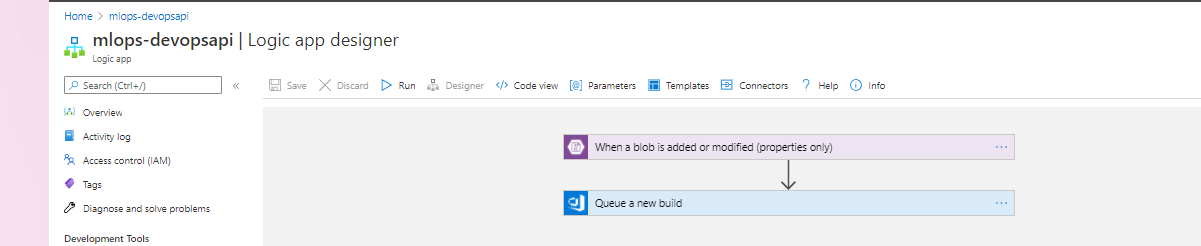

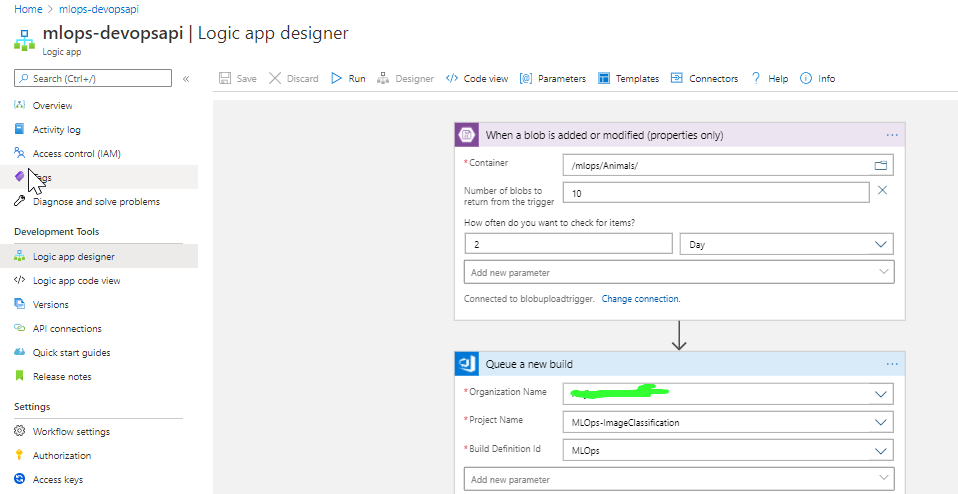
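To make the folder-based tagging concrete, here is a minimal sketch of how a tag could be derived from a blob name. It assumes the top-level folder of each blob is the tag; the function names and the sample blob names are hypothetical and are not taken from the project.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def tag_for_blob(blob_name: str) -> str:
    """Return the top-level folder of a blob name, used here as the image tag."""
    parts = PurePosixPath(blob_name).parts
    if len(parts) < 2:
        # A blob at the container root has no folder to take a tag from.
        raise ValueError(f"blob {blob_name!r} is not inside a tag folder")
    return parts[0]


def group_by_tag(blob_names):
    """Group blob names by the tag derived from their folder."""
    groups = defaultdict(list)
    for name in blob_names:
        groups[tag_for_blob(name)].append(name)
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical blob names, for illustration only.
    blobs = ["apple/img001.jpg", "apple/img002.jpg", "banana/img001.jpg"]
    print(group_by_tag(blobs))
```

Keeping the tag in the path means no separate label file has to be maintained: uploading an image into the right folder is the labelling step.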

Once the storage is created, the next step is to create the Logic App. Logic Apps have workflows that can react to events on files inside Blob storage, such as deletion or upload. I created a workflow that is triggered when images are uploaded into the folders inside Blob storage and then makes a REST API call to queue a build in Azure DevOps. The creation steps are described in the link. My Logic Apps Designer view is shown below.


Note: Another way to react to the Blob upload event asynchronously is to use Azure Functions. Logic Apps seemed nice, so I chose it.
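For reference, the REST call that queues a build can be sketched in Python. This is only an illustration of the request shape, assuming the Azure DevOps "Builds - Queue" endpoint with API version 6.0 and a personal access token sent as Basic auth; the organization, project, definition ID and token below are placeholders, and the Logic App in this article builds the equivalent request in its own HTTP action.

```python
import base64
import json
import urllib.request


def build_queue_request(organization, project, definition_id, pat, api_version="6.0"):
    """Build (but do not send) the POST request that queues a pipeline run."""
    url = (
        f"https://dev.azure.com/{organization}/{project}"
        f"/_apis/build/builds?api-version={api_version}"
    )
    body = json.dumps({"definition": {"id": definition_id}}).encode("utf-8")
    # A PAT is sent as Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    # Placeholder values; nothing is sent over the network here.
    request = build_queue_request("my-org", "my-project", 12, "my-pat")
    print(request.get_method(), request.full_url)
    # To actually queue the build: urllib.request.urlopen(request)
```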

- Continuous integration

The REST API call from the previous part queues the build, which executes a series of steps:

1. Import images
2. Train model
3. Export model
4. Configure/build Az IoT modules

Before we jump into the CI/CD part, the build infrastructure needs to be set up. I am using a containerized version of Azure DevOps agents, bundled with the necessary build tools, libraries and runtimes. For orchestration and desired-state management of the containers, I went with the K3s Kubernetes distribution. To set up K3s on your machine, you may follow the instructions here.

Please note that agents are required for two different platforms: x86_64 (Azure VM) for training the model, as it is resource intensive, and arm for building the IoT modules and generating the manifest for arm32 (Raspberry Pi), which avoids cross-compilation time. The Docker images can be found here: x86_64 and arm.

K3s manifest for the Az DevOps agents:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: aziot-devops-agent-deployment
      labels:
        app: aziot-devops-agent-deployment
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: aziot-devops-agent-deployment
      template:
        metadata:
          labels:
            app: aziot-devops-agent-deployment
        spec:
          securityContext:
            fsGroup: 995 # Group ID of docker group
          containers:
            - name: dockeragent
              image: mysticrenji/azdevopsagentsiotedgeamd64:latest
              env:
                - name: AZP_TOKEN
                  valueFrom:
                    secretKeyRef:
                      name: azdo-secret-config
                      key: AZP_TOKEN
                - name: AZP_URL
                  valueFrom:
                    configMapKeyRef:
                      name: azdo-environment-config
                      key: AZP_URL
                - name: AZP_AGENT_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: AZP_POOL
                  valueFrom:
                    configMapKeyRef:
                      name: azdo-environment-config
                      key: AZP_POOL
              volumeMounts:
                - name: dockersock
                  mountPath: "/var/run/docker.sock"
          volumes:
            - name: dockersock
              hostPath:
                path: /var/run/docker.sock
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: azdo-secret-config
    data:
      # Supply your own token here; values under `data` must be base64-encoded
      AZP_TOKEN:
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: azdo-environment-config
    data:
      # Append your organization name to the URL and fill in the remaining values
      AZP_URL: https://dev.azure.com/
      AZP_AGENT_NAME:
      AZP_POOL:
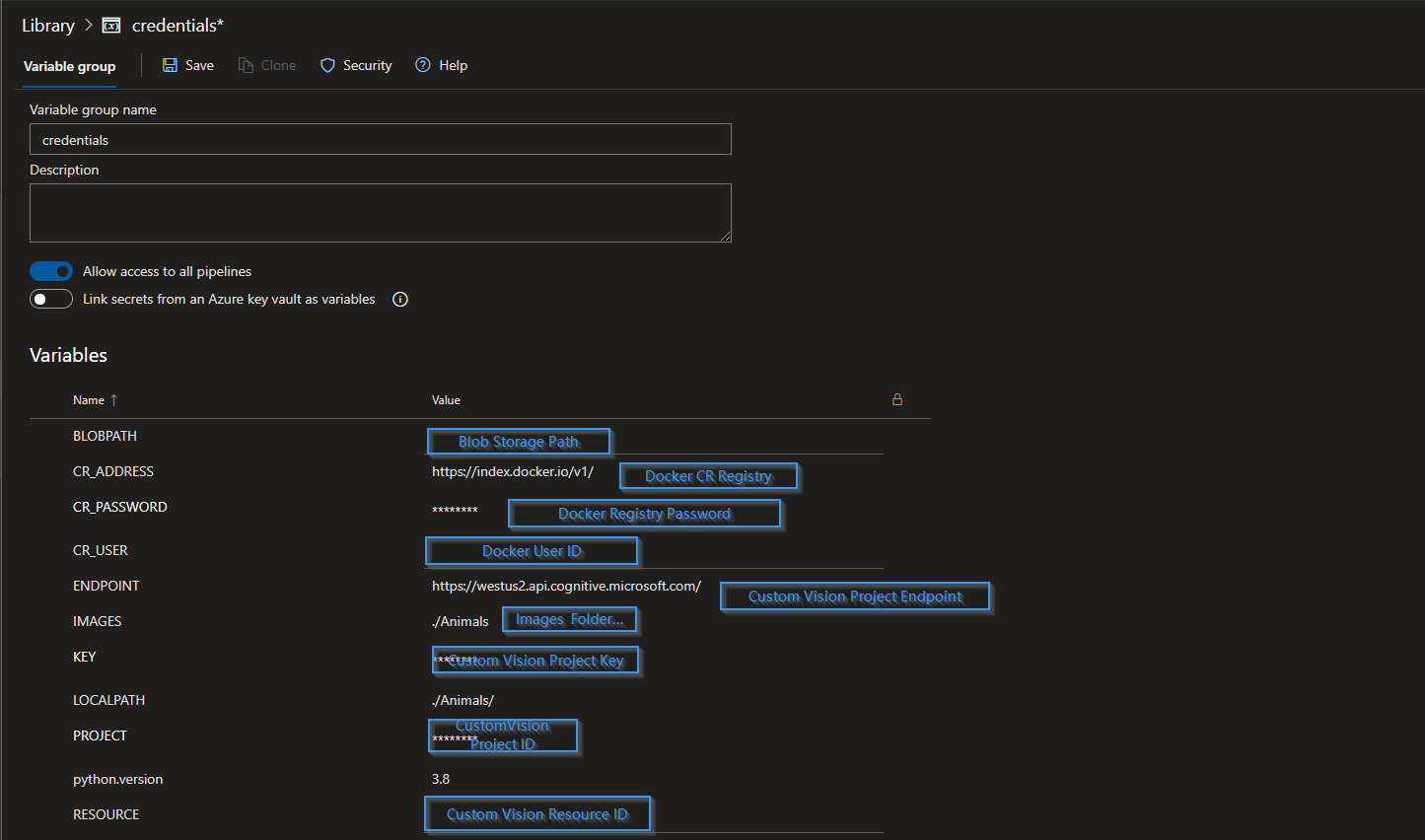
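One detail that is easy to miss in the Secret manifest: values under `data` in a Kubernetes Secret must be base64-encoded. A minimal sketch of producing such a value in Python follows; the helper name and the sample token are hypothetical.

```python
import base64


def encode_secret_value(value: str) -> str:
    """Base64-encode a string for use under `data` in a Kubernetes Secret."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    # Hypothetical token, for illustration only.
    print(encode_secret_value("my-azure-devops-pat"))
```

Alternatively, a Secret can carry plain-text values under `stringData`, and Kubernetes encodes them on creation.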

Once the Az DevOps agents are configured, we can set up Custom Vision, Speech Services and the IoT module. I borrowed some of the good stuff from the Git repo by Dave Glover, which clearly takes you through the basic steps for setting up the cognitive cloud services and the configuration on the Raspberry Pi. Once you complete the project setup, the next step is to configure the CI/CD pipelines.

Variables

The Azure pipeline is defined in YAML and references a variable group containing credentials. The group needs to be created before triggering the pipeline; otherwise the run will end in an error.

2.1 Import images: This task downloads the images from Blob storage to the build server.

2.2 Train model: The images are scanned, tagged according to their folder name and pushed to the Custom Vision project.

2.3 Export model: When the ML model training is complete, the model is exported in a mobile-optimized format.

2.4 Configure/build Az IoT modules: In this step the IoT modules are built based on the configuration, along with the exported model from the previous step. This process executes on the arm32v7 platform (Raspberry Pi). Please check out my earlier post for a detailed walkthrough on building IoT modules.

2.5 Generate manifest: This step takes the output deployment file (deployment.json) from the previous step and generates the manifest file for the specified platform. Finally, the manifest file is published as a build artifact to the Azure Pipelines artifact location.
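The scanning part of step 2.2 can be sketched without the Custom Vision SDK: walk the downloaded folders, pair each image with its folder name as the tag, and split the result into upload batches. This is an illustrative sketch, not the project's code; the function names are hypothetical, and the default batch size of 64 is an assumption based on the per-batch image limit documented for Custom Vision uploads.

```python
from pathlib import Path

# File types treated as images in this sketch (an assumption).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def collect_tagged_images(root):
    """Return (tag, path) pairs, where the tag is the name of the image's folder."""
    tagged = []
    for folder in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        for image in sorted(folder.iterdir()):
            if image.suffix.lower() in IMAGE_SUFFIXES:
                tagged.append((folder.name, image))
    return tagged


def chunk(items, size=64):
    """Split a list into consecutive batches of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


if __name__ == "__main__":
    # "images" is a hypothetical download directory from step 2.1.
    if Path("images").is_dir():
        for batch in chunk(collect_tagged_images("images")):
            print(f"would upload {len(batch)} images")
```

Each batch would then be handed to the training client's upload call before the training run is started.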

- Continuous Delivery

In an edge infrastructure, multiple devices send telemetry data to an edge server or to the cloud for computing. Managing and monitoring them becomes troublesome when there are many devices, and keeping the communication channel secure is a challenge of its own. This is where IoT Hub comes to the rescue. Azure IoT Hub is a fully managed service that enables reliable and secure bidirectional communication between millions of IoT devices and a solution back end. It also provides automatic device provisioning, maintenance and sunsetting of devices.

Please note that the Azure IoT Edge runtime must be installed on the edge device for it to talk to Azure IoT Hub. The instructions for installing the runtime are mentioned here.

The CD part takes the deployment manifest file from the artifact location and deploys the modules listed in the file to Az IoT Hub. Az IoT Hub then communicates with the edge device registered with it, and the device pulls the new modules from the Docker registry.

For this article, I set up the edge infrastructure a bit differently. The MS team recently announced the capability of embedding IoT Edge resources into Kubernetes (beta version). This way you can monitor the health of the running modules and the device from Kubernetes itself. Follow the steps below to set this up.

Install Helm and create the service account and cluster role binding:

    # Install Helm 3
    curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

    # Helm 3 itself no longer uses Tiller; these two commands are kept from the original steps
    kubectl create serviceaccount tiller --namespace kube-system
    kubectl create -f tiller-clusterrolebinding.yaml

Save the following as tiller-clusterrolebinding.yaml before running the last command above:

    kind: ClusterRoleBinding
    # rbac.authorization.k8s.io/v1beta1 was used originally; it is removed in newer Kubernetes releases
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: tiller-clusterrolebinding
    subjects:
      - kind: ServiceAccount
        name: tiller
        namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io

Install the IoT Edge charts:

    # Helm repo for IoT Edge
    helm install --repo https://edgek8s.blob.core.windows.net/staging \
      edge-crd \
      edge-kubernetes-crd

    # Create namespace for Edge Device
    kubectl create namespace raspi-master

    # Install and run modules on Devices
    # The connection string value is missing from the last line; supply your device's and close the quote
    helm install --repo https://edgek8s.blob.core.windows.net/staging \
      raspi-master \
      edge-kubernetes \
      --namespace raspi-master \
      --set "provisioning.deviceConnectionString=

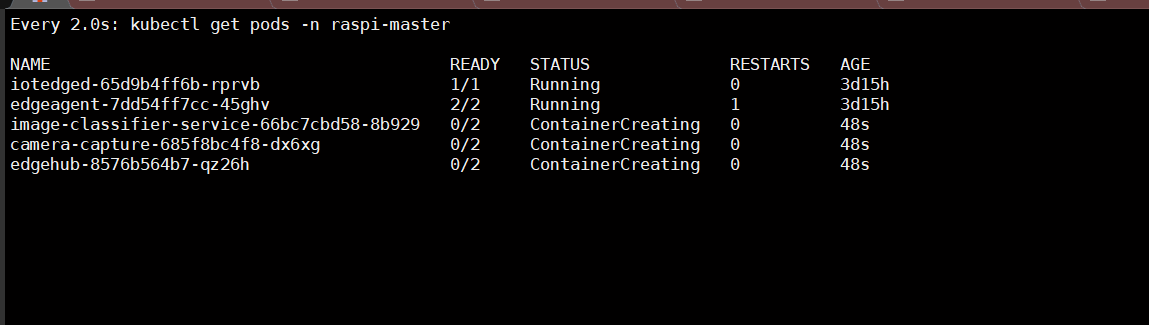

After the infrastructure has been set up and the modules are deployed by CD, you can see the modules and edge runtimes gradually appearing in the Kubernetes cluster. You may find more details on customizing IoT modules in this link.
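To check which container images a deployment manifest will ask the device to pull, the manifest can be inspected before it is deployed. The sketch below assumes the standard IoT Edge deployment manifest layout, where custom modules sit under `modulesContent.$edgeAgent["properties.desired"].modules`; the function name and the `deployment.arm32v7.json` file name are hypothetical.

```python
import json


def module_images(manifest: dict) -> dict:
    """Map each custom module name in a deployment manifest to its container image."""
    desired = manifest["modulesContent"]["$edgeAgent"]["properties.desired"]
    return {
        name: spec["settings"]["image"]
        for name, spec in desired.get("modules", {}).items()
    }


if __name__ == "__main__":
    # Hypothetical manifest file name; use the artifact produced by your CI stage.
    try:
        with open("deployment.arm32v7.json", encoding="utf-8") as handle:
            for name, image in module_images(json.load(handle)).items():
                print(f"{name}: {image}")
    except FileNotFoundError:
        print("manifest not found; pass the path of your generated manifest")
```

A check like this could run as a gate in the release stage, for example to confirm that every image tag points at the expected registry.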

Kindly try this out; feedback is always welcome!

References

- GitHub

Re-shared from my blog.


Renjith Ravindranathan

A DevOps engineer by profession, dad, traveler, and someone who likes to tinker with memory-constrained devices in his spare time.
